https://youtu.be/vDYxYkIb-us

Intro to AI

AI systems like GPT are “neural networks.” Their structure is loosely modeled on the brain, but they can process information much faster than a human brain can. Nobody fully understands how they work, including the people who built them. There are many kinds of AI systems, but the best-known are large language models (LLMs), like GPT-4.

Current progress in AI

GPT-4 was released in March 2023. It is groundbreaking: it is intelligent in ways that have never been seen before in a computer. GPT-4 has common sense and the ability to reason. It is not just “copy-paste on steroids.”

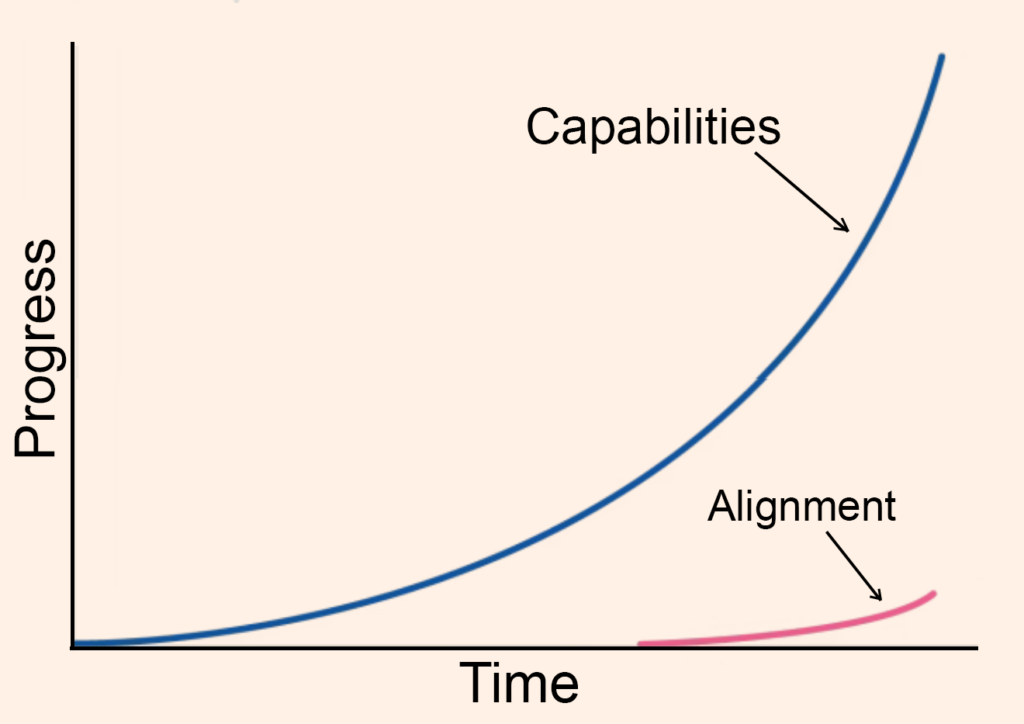

As shown by the blue line in the graph above, we are seeing rapid progress in the capabilities of AI systems. When an AI becomes capable enough at learning and improving itself without the guidance of humans, many experts believe that it could “take off,” improving itself with few limitations and becoming “superintelligent.”

The red line in the graph represents the progress in alignment research, which can be thought of as our understanding of how to make AI systems safe.

Graph reproduced from Financial Times: Ian Hogarth (April 12, 2023), “We must slow down the race to God-like AI.”

Benefits and Harms

Leading experts have stated clearly that AI holds enormous potential—including helping humanity solve many of its most difficult problems.

Curing cancer, having food and a house for everyone, freeing people from dangerous work, creating sustainable energy solutions—these are all real possibilities, and having highly powerful AI on our side could make a huge difference in helping us achieve these things.

Unfortunately, the negative possibilities are just as astounding. Let us share a statement published on May 30, 2023 by the Center for AI Safety:

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

This statement was signed by a coalition of world-leading AI experts, including senior leaders at Microsoft, Google DeepMind, and OpenAI (the creator of GPT), along with many other top scientists and engineers from universities and AI companies around the world.

How could AI be so dangerous?

Misinformation, job loss, fraud—these are big problems that are already happening. Weaponization will also likely be a growing problem. AI systems could, for example, be used to design pandemic-class viruses. An AI system could also get out of human control and do things that nobody intended, including replicating itself, creating its own goals, and seeking to influence the world in ways that harm humans. This could lead to major disruptions in both the digital and the physical world, and even the loss of human life on a large scale. These are the opinions of many world-leading AI engineers and scientists, not a fringe minority.

Who is in charge of this?

Which direction things go with AI depends on how the process is managed. Currently, keeping AI systems safe is left largely up to private companies. There is little regulatory oversight. Companies can deploy an AI system without knowing exactly how it works or how powerful it really is. They may not know whether it can make copies of itself, generate its own goals, or intentionally lie to people, or even whether it is conscious.

There is some government interest in this issue, but it is not keeping pace with the rapid changes happening in the AI industry and the risks these changes create.

The change we want

Let us be clear: we are not blaming any company for anything. We are pointing out critical weaknesses in the system by which people are building increasingly powerful AI.

The lack of clear standards and safety protocols is completely inappropriate. Governments need to understand the potential dangers of AI and regulate the AI industry to minimize those dangers. We would like to see a well-resourced international governing body created specifically for this purpose.

We don’t have the expertise to tell you specifically how AI regulations should be set up. Our role is simpler than that. We aim to raise awareness of the potential dangers of AI, and to put pressure on governments and AI companies to treat AI safety with deadly seriousness.

Will this advocacy work?

It has a good chance. Tension is building on this topic. Many experts are voicing severe concerns about AI online and in the news, but regular people are not yet raising their voices. Given how huge this issue is, public pressure could have a real impact. We don’t know how much we can affect governments and AI companies, but the only way to find out is to try!

Please support us by following us on social media and taking part in our events! One of the most meaningful ways you can help us is to donate. The more money we have, the more we can do, and the sooner we can do it.

Thank you for considering supporting us!